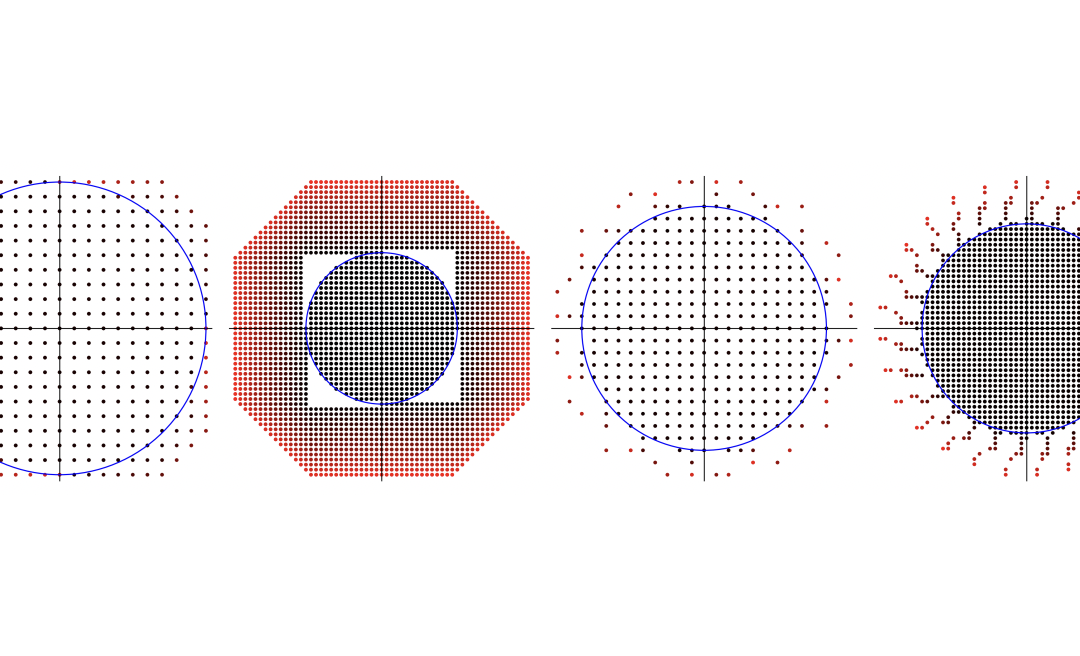

Classical verification techniques that aim at determining whether a system (model) satisfies a given specification (or not) are often unsatisfactory in practice. Perspicuous verification techniques require more light being shed on why the verification answer holds or not, and how much impact states, system components, or parameters have on the satisfaction or violation of properties. The goal of Project A12 is to provide a solid foundational base for such a perspicuity-aware analysis of dynamical systems, hybrid automata, and probabilistic models. This includes explications and certificates for verification results, various concepts to reason about robustness, quantitative responsibility ascription, and cause-effect relations in operational models.

A12 – Dynamical, Hybrid, and Probabilistic Systems: Verification & Explication