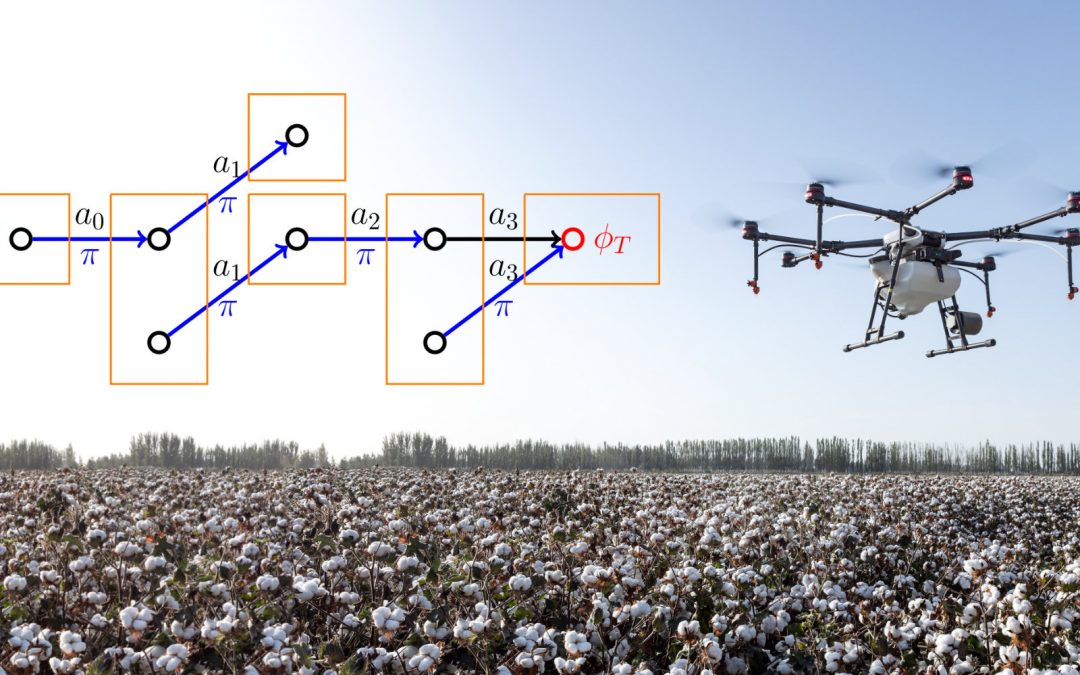

AI systems that choose actions in dynamic environments need to react to the environment's behaviour in real time. Neural networks are rapidly gaining traction for this task: a policy is learned at design time, so that at run time it suffices to query the policy for the next action to execute. This form of run-time planning is fast, but it comes with severe safety and perspicuity issues. The vision of Project C6 is to address these problems through a range of methods that rely on declarative models, or simulators, of the environment. These methods encompass policy safeguarding (trying to avoid unsafe situations at run time), verification and testing of policy behaviour, explication of policy decisions, visualisation of policy behaviour, and policy re-training that leverages the information provided by all of these techniques.
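To illustrate how a model of the environment can safeguard a learned policy at run time, here is a minimal sketch under loudly stated assumptions. It is not Project C6's method. It is a generic one-step lookahead safeguard, a simple form of what the safe reinforcement learning literature calls shielding. The toy environment (a position on a line with a "cliff" at position 0), the action encoding, and the names `simulate`, `is_unsafe`, `safeguard`, and `greedy_left` are all hypothetical. `greedy_left` stands in for a trained neural-network policy.

```python
from typing import Callable, Sequence

# Hypothetical toy setting: the state is an integer position on a line,
# and actions move left (-1), stay (0), or move right (+1).
State = int
Action = int
ACTIONS: Sequence[Action] = (-1, 0, 1)


def simulate(state: State, action: Action) -> State:
    """Hypothetical deterministic environment model (the 'simulator')."""
    return state + action


def is_unsafe(state: State) -> bool:
    """Hypothetical safety property: positions <= 0 are a cliff."""
    return state <= 0


def safeguard(policy: Callable[[State], Action], state: State) -> Action:
    """Keep the policy's action if the model predicts a safe successor;
    otherwise fall back to the first action whose successor is safe."""
    proposed = policy(state)
    if not is_unsafe(simulate(state, proposed)):
        return proposed
    for action in ACTIONS:
        if not is_unsafe(simulate(state, action)):
            return action
    # No action leads to a safe successor: one-step lookahead cannot help,
    # so defer to the policy's original choice.
    return proposed


def greedy_left(state: State) -> Action:
    """Stand-in for a learned policy that always moves left."""
    return -1


print(safeguard(greedy_left, 5))  # moving to position 4 is safe, so -1 is kept
print(safeguard(greedy_left, 1))  # moving to 0 would be unsafe, so staying (0) is chosen
```

The design choice here is deliberately simple: the policy still does the fast work of proposing an action, and the model is consulted only to veto it. A one-step check only ensures the *next* state is safe, not that a safe continuation exists afterwards; stronger safeguards would, for instance, reason about reachability over longer horizons using the same model.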

C6 – Fast, Safe, and Perspicuous Run-Time Planning